I have ventured into the world of Isovalent since the Cisco acquisition. The following is the start of my new lab, where I will be integrating Kubernetes and Cilium with a Cisco NXOS VXLAN / EVPN Fabric.

The intention here is to practice Cilium networking whilst highlighting some of the specific benefits of a design such as this in terms of resiliency, scalability, security and observability.

In particular, I want to highlight the multi-cloud use cases alongside network policies (micro-segmentation) in Cilium and the level of observability that Hubble provides.

I highly recommend starting with the Isovalent Cilium labs which have been a fantastic start in all things Cilium and Kubernetes for me.

The Lab

To start with, an outline of the resources used in my lab:

- Ubuntu Server 24.04.3 LTS

- VMware Workstation 17 Pro 17.6.4 build-24832109

- Cisco Modeling Labs 2.8.0 (Ubuntu 24.04.1 LTS)

The lab was initially built entirely in CML 2.8.0 using Ubuntu images. However, I had issues with control plane communication after initialising the podCIDR, which pointed me towards a potential kernel compatibility issue.

This issue, and hours of fault finding, pushed me to a hybrid lab: Ubuntu Server VMs for K8s and Cilium, alongside my Cisco NXOS Spine and Leaf within CML, with a VMNET bridging connectivity between the two.

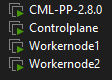

Currently the initial build consists of three Ubuntu Server VMs and my CML lab. Within the CML lab I have three Nexus 9Ks, an L2 switch (replicating a bond to Ubuntu) and an external connector.

The external connector provides the VMNET link between CML and the Ubuntu VMs.

The Ubuntu VMs are also bridged to my internal home lab network, which provides the management and inter-node connectivity required to build out the K8s cluster.

The Design

Firstly, the VM Network Design below. The Inter-VM network shows connectivity between CML and our Ubuntu VMs via VMNET10 (Bridge1 in CML).

The Management network is my internal home lab network, which has internet access via BRIDGE0.

Secondly, the NXOS to Cilium Design below. The external connector in CML, which is mapped to Bridge1 (VMNET10), provides connectivity between the Ubuntu VMs running Kubernetes and the CML Nexus devices on the Inter-VM Network. This portion of the network will be used for Native Routing and eBGP advertisements of PodCIDR and Service IPs.

The IOL-L2-0 is in place purely to replicate some LACP and allow the configuration of our Nexus vPC. In reality the Leaf and Ubuntu servers would be directly connected with Bond0. (I deemed adding more external connectors and trying to bond the Ubuntu interfaces too complex, so I added the IOL-L2-0 as a lab workaround.)

Thirdly, the K8s Cluster Design below. The PodCIDR is 172.16.0.0/16. The Watersource Pods have been replicated across the Workernodes, and each Workernode allocates its Pod IPs from its own /24 within the /16 range.

Workernode1 uses 172.16.1.0/24 and Workernode2 is using 172.16.2.0/24.
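
As a quick sanity check of that addressing, here is a minimal Python sketch using only the standard library `ipaddress` module. It carves the /16 into /24 blocks and confirms that the two Workernode ranges sit inside it. The node names used as dictionary keys are just labels for illustration; in the real cluster the per-node ranges are handed out by IPAM, not by a script like this.

```python
import ipaddress

# Cluster-wide PodCIDR from the design above.
cluster_cidr = ipaddress.ip_network("172.16.0.0/16")

# Carve the /16 into /24 blocks, one candidate block per node.
node_blocks = list(cluster_cidr.subnets(new_prefix=24))

# Per-node PodCIDRs as stated in the post (keys are illustrative labels).
worker_cidrs = {
    "workernode1": ipaddress.ip_network("172.16.1.0/24"),
    "workernode2": ipaddress.ip_network("172.16.2.0/24"),
}

for name, cidr in worker_cidrs.items():
    # Each node range must be one of the /24 blocks inside the /16.
    assert cidr.subnet_of(cluster_cidr)
    block_index = node_blocks.index(cidr)
    print(f"{name}: {cidr} is block {block_index} of {len(node_blocks)}, "
          f"{cidr.num_addresses} addresses")
```

The same check is handy later on when more Workernodes are added, as any overlap or out-of-range block fails the assertion straight away.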

Finally, the Routing Design below. This wasn’t a straightforward design, but it is highly redundant.

iBGP runs between the Leaf switches within the vPC pair (this allows eBGP peering over the vPC peer-link). The iBGP session only advertises the local loopback, which should not be advertised into L2VPN EVPN.

The Anycast Gateway within the INTER-VM network is used as the next hop to reach our local loopbacks. The INTER-VM network is also our Native Routing subnet.

eBGP peering is between the NodeIPs from within the INTER-VM network and the local loopbacks on the Leaf switches.

PodCIDR is advertised via eBGP to our Nexus Spine and Leaf. Note that by default only the /24s in use on the Workernodes are advertised, not the wider /16.
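
To illustrate why the per-node /24s are what the fabric needs, the sketch below models the learned routes as a small table and does a longest-prefix-match lookup in plain Python. It is only a toy model, not how NXOS implements its RIB. The next hops are shown as node names because the real NodeIPs are not listed here, and the Pod addresses in the lookups are made up for the example.

```python
import ipaddress

# Routes the fabric would learn over eBGP: one /24 per Workernode.
# Next hops are node names (placeholders for the real NodeIPs).
learned_routes = {
    ipaddress.ip_network("172.16.1.0/24"): "workernode1",
    ipaddress.ip_network("172.16.2.0/24"): "workernode2",
}

def lookup(dst):
    """Return the next hop for dst using longest-prefix match, or None."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in learned_routes if addr in net]
    if not matches:
        return None
    best = max(matches, key=lambda net: net.prefixlen)
    return learned_routes[best]

# Hypothetical Pod addresses, one per node range plus one unallocated range.
for pod_ip in ("172.16.1.25", "172.16.2.25", "172.16.3.25"):
    print(pod_ip, "->", lookup(pod_ip))
```

With only the /24s present, traffic for a range that no Workernode owns has no matching route, whereas a single /16 aggregate could not tell the fabric which node to forward to.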

On the next page I’ll be diving into the K8s build, Cilium build and NXOS build.
