All credit for my base Kubernetes build goes to a recent post I found on Medium, which has been extremely helpful. The only difference is the CNI: the guide uses Calico, whereas I am using Cilium (see next page). I’ll be sharing the full process I followed, based on the following guide:

Kubernetes Cluster Setup on Ubuntu VMs (Home Lab Guide for Beginners) | by Rushain Sovis | Medium

Kubernetes build on Ubuntu Server VMs

Building the VMs is out of scope; this section covers installing Kubernetes. It assumes the Ubuntu VMs already have internet access and working DNS.

I should add that I honestly don’t know what a lot of these commands do (in this section, at least). I’m a network engineer, and Kubernetes has been a learning curve, to say the least.

This took a lot of trial and error. Unless otherwise stated, the following should be configured on all three Ubuntu VMs: the controlplane, Workernode1 and Workernode2.

sudo apt update && sudo apt upgrade -y

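sudo apt install -y apt-transport-https curl

# Turn swap off now (by default the kubelet will not start while swap is enabled)

sudo swapoff -a

# Comment out the swap line so swap stays off after a reboot!

sudo vim /etc/fstab

# On controlplane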

sudo hostnamectl set-hostname controlplane

# On Workernode1

sudo hostnamectl set-hostname Workernode1

# On Workernode2

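sudo hostnamectl set-hostname Workernode2

# On all three VMs again: add every node to /etc/hosts (replace the placeholders with your own IPs)

sudo tee -a /etc/hosts <<EOF

<Control-Plane-IP> controlplane

<Workernode1-IP> Workernode1

<Workernode2-IP> Workernode2

EOF

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf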

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

sudo sysctl --system

sudo apt install -y containerd

sudo mkdir -p /etc/containerd

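containerd config default | sudo tee /etc/containerd/config.toml

# Switch containerd to the systemd cgroup driver so it matches the kubelet, which kubeadm configures with systemd by default

sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml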

sudo systemctl restart containerd

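sudo systemctl enable containerd

sudo apt install -y apt-transport-https ca-certificates curl gpg

# /etc/apt/keyrings exists by default on Ubuntu 22.04 and later; this creates it on older releases

sudo mkdir -p -m 755 /etc/apt/keyrings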

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.33/deb/Release.key | \

sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.33/deb/ /' | \

sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt update

sudo apt install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

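sudo systemctl enable --now kubelet

If you made it this far with no errors, fantastic. Don’t worry if the kubelet keeps restarting every few seconds at this stage; it is waiting for kubeadm init or kubeadm join to tell it what to do. This is the point in the build where I took a snapshot of each of my VMs.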
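Before taking those snapshots, it can help to confirm the prep actually took effect on each VM. Below is a minimal sketch of three checks (my own hypothetical helpers, not from the linked guide): swap is off, the three sysctls read 1, and containerd’s config has SystemdCgroup = true. Each function takes an optional path so it can be tried against test files; the defaults assume the standard Ubuntu locations (/proc/swaps, /proc/sys and /etc/containerd/config.toml). kubeadm runs its own preflight checks during init and join as well, so this just catches problems one step earlier.

```shell
# Hypothetical pre-snapshot checks; my own helpers, not from the linked guide.
# Each takes an optional path so it can be tried against test files; the
# defaults are the standard locations on an Ubuntu node.

# Swap must be off: /proc/swaps has a header line plus one line per active swap area.
check_swap_off() {
  local swaps="${1:-/proc/swaps}" active
  active="$(awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$swaps")"
  if [ "$active" -ne 0 ]; then
    echo "swap: $active active swap area(s), run 'sudo swapoff -a'" >&2
    return 1
  fi
}

# The three sysctls from /etc/sysctl.d/k8s.conf must read 1. Each key maps to a
# file under /proc/sys, with the dots replaced by slashes.
check_k8s_sysctls() {
  local root="${1:-/proc/sys}" rc=0 key file val
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    file="$root/$(printf '%s' "$key" | tr . /)"
    if [ ! -r "$file" ]; then
      # The net.bridge.* keys only appear once br_netfilter is loaded.
      echo "$key: not found (is br_netfilter loaded?)" >&2
      rc=1
      continue
    fi
    val="$(cat "$file")"
    if [ "$val" != "1" ]; then
      echo "$key = $val (expected 1)" >&2
      rc=1
    fi
  done
  return "$rc"
}

# containerd must use the systemd cgroup driver, i.e. the sed edit took effect.
check_systemd_cgroup() {
  local cfg="${1:-/etc/containerd/config.toml}"
  if grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*false' "$cfg"; then
    echo "containerd: SystemdCgroup is still false in $cfg" >&2
    return 1
  fi
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$cfg"
}
```

Save the functions to a file, source it on each VM and run `check_swap_off && check_k8s_sysctls && check_systemd_cgroup && echo "node prep OK"`. On a default Ubuntu install these files should be readable without sudo.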

Now we can move forward with initialising our controlplane and setting our PodCIDR range (the IPAM range that Pods are given addresses from automagically when they’re created).

As per our design, I am using 172.16.0.0/16. Make sure this does not overlap with the range your nodes are on.
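To check that the Pod CIDR really is clear of the node range: two IPv4 CIDRs overlap exactly when their network addresses match on the bits of the shorter prefix. Below is a minimal sketch assuming IPv4 only; `cidrs_overlap` is a hypothetical helper name, and it drops the host bits by dividing by 2^(32 - prefix) because POSIX awk has no bitwise operators. The Pod CIDR should also stay clear of the Service CIDR, which kubeadm defaults to 10.96.0.0/12.

```shell
# Hypothetical helper (not from the guide): exits 0 if two IPv4 CIDR blocks
# overlap, 1 if they are disjoint.
cidrs_overlap() {
  awk -v c1="$1" -v c2="$2" '
    # Dotted quad "a.b.c.d" -> 32-bit integer (exact in awk doubles).
    function ip2int(ip,   o) {
      split(ip, o, ".")
      return ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4]
    }
    BEGIN {
      # A bare address with no "/len" is treated as a /32.
      if (split(c1, x, "/") < 2) x[2] = 32
      if (split(c2, y, "/") < 2) y[2] = 32
      # Compare both addresses under the shorter (wider) prefix.
      p = (x[2] + 0 < y[2] + 0) ? x[2] + 0 : y[2] + 0
      # Dropping the host bits = integer division by 2^(32 - p).
      size = 2 ^ (32 - p)
      if (int(ip2int(x[1]) / size) == int(ip2int(y[1]) / size)) exit 0
      exit 1
    }'
}
```

For example, `cidrs_overlap 172.16.0.0/16 10.96.0.0/12 || echo "no overlap"` prints "no overlap"; swap in your own node subnet as the second argument to check that side too.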

#ONLY CONFIGURED ON CONTROLPLANE

sudo kubeadm init --control-plane-endpoint=controlplane --pod-network-cidr=172.16.0.0/16

At this point kubeadm should have printed a kubeadm join command SIMILAR to the one below. This command is used to onboard our Workernodes to our controlplane (master node).

# KEEP IT SAFE, DON'T USE IT YET

kubeadm join controlplane:6443 --token gwkrlz.o4l8cjbo0hfhz26p --discovery-token-ca-cert-hash sha256:9112382293a59406560ea944120c11b47631992ed06ff5d3bcc1548fce11deb1

# IF YOU LOSE IT, GET ANOTHER ONE FROM THE CONTROLPLANE (join tokens expire after 24 hours by default)

sudo kubeadm token create --print-join-command
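If you’re curious where the sha256 value in the join command comes from: per the kubeadm documentation, it is the SHA-256 of the cluster CA certificate’s public key (its DER-encoded Subject Public Key Info). The sketch below wraps the openssl pipeline the kubeadm docs give for recomputing it; `ca_cert_hash` is a hypothetical name, and it assumes the default CA path /etc/kubernetes/pki/ca.crt and an RSA CA key, which is kubeadm’s default.

```shell
# Hypothetical helper wrapping the openssl pipeline from the kubeadm docs.
# Prints the hex SHA-256 of the CA certificate's DER-encoded public key.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  # Extract the public key, re-encode it as DER, hash it, keep only the hex digest.
  openssl x509 -pubkey -noout -in "$ca" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //'
}
```

On the controlplane, `echo "sha256:$(ca_cert_hash)"` should print the same value as the --discovery-token-ca-cert-hash in your join command.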

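Next we run a few more Linux commands to copy the admin kubeconfig into our home directory and chown it, so kubectl can talk to the cluster as our normal user without sudo.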

#ONLY CONFIGURED ON CONTROLPLANE

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Now we’re in a position to onboard the Workernodes. From each of the Workernodes, run the join command that was printed during your controlplane initialisation:

sudo kubeadm join controlplane:6443 --token gwkrlz.o4l8cjbo0hfhz26p --discovery-token-ca-cert-hash sha256:9112382293a59406560ea944120c11b47631992ed06ff5d3bcc1548fce11deb1======================We can now confirm our Workernodes have been onboarded to our controlplane

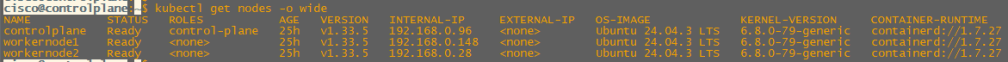

kubectl get nodes -o wide
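Until a CNI is installed, every node will report NotReady because the kubelet can’t set up Pod networking yet, so expect that here and for it to clear after the Cilium install on the next page. If you want a scriptable yes/no rather than eyeballing the STATUS column, here is a minimal sketch; `all_nodes_ready` is a hypothetical helper that reads `kubectl get nodes --no-headers` output on stdin, assuming STATUS is the second column as in kubectl’s default table output.

```shell
# Hypothetical helper (not from the guide): reads `kubectl get nodes --no-headers`
# output on stdin and succeeds only if at least one node is listed and every
# node's STATUS column (field 2) is Ready. A cordoned node shows
# "Ready,SchedulingDisabled", which is also counted as Ready.
all_nodes_ready() {
  awk '
    NF == 0 { next }
    { total++ }
    $2 != "Ready" && $2 !~ /^Ready,/ {
      notready++
      printf "not ready: %s (%s)\n", $1, $2 > "/dev/stderr"
    }
    END { if (total == 0 || notready > 0) exit 1; exit 0 }'
}
```

Usage on the controlplane: `kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"`. Any node that isn’t Ready is listed on stderr.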

Just to reiterate, I’m just starting out on my Kubernetes journey; the main focus for me with this lab is practicing with Isovalent products. That inevitably involves learning some Kubernetes, and I’d be lost without beginner guides like this one: Kubernetes Cluster Setup on Ubuntu VMs (Home Lab Guide for Beginners) | by Rushain Sovis | Medium

The only difference here is the CNI used. Unlike the linked guide, I installed the Cilium CNI after the Workernodes were onboarded. See the next page for the Cilium installation.